Week 13. Midterm Plan

Tactile Labyrinth remaining steps.

Project Overview

A tangible spherical object with LED lights forming a labyrinth pattern that responds to physical interaction (turning, tilting, etc.).

Hardware Requirements

- Seeed Studio XIAO RP2040 microcontroller

- 6DoF accelerometer and gyroscope – Adafruit LSM6DSOX with STEMMA QT

- LED rope with 250 addressable RGB LEDs – WS2812B chip

- Power supply (USB/battery considerations)

- Tactile housing/structure to support the LED rope in a spherical pattern

- Button input (existing in current code)

Libraries

- FastLED - For controlling the LED string

- Wire - For I2C communication with the accelerometer

- Adafruit_LSM6DS - For the specific accelerometer/gyroscope

- Adafruit_Sensor - Required for the LSM6DS library

Core Functionality Requirements

- Map the physical layout of the LED rope around the sphere

- How is the rope physically arranged? Linear mapping or something more complex?

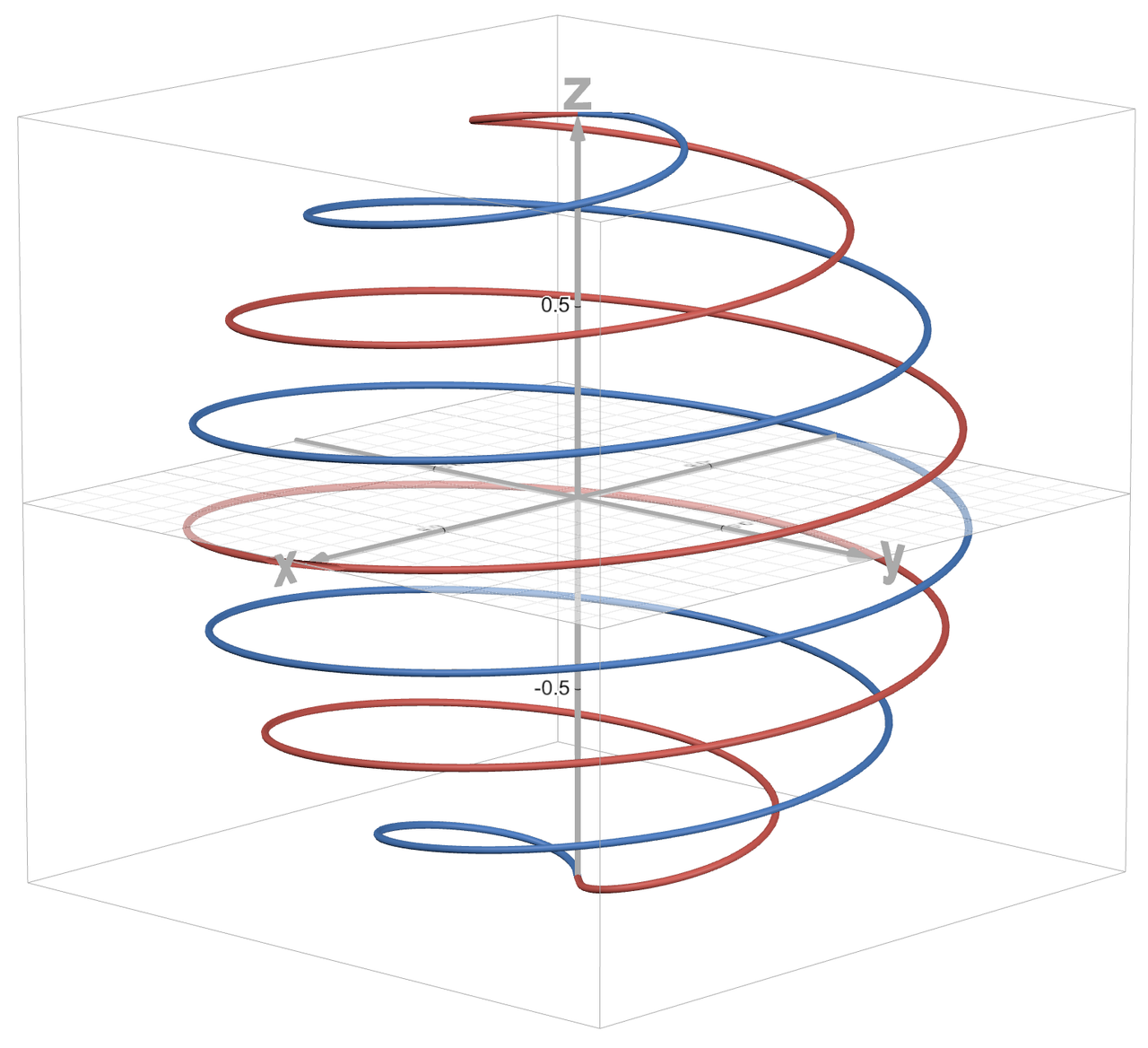

- It starts at one pole, wraps around the sphere in a coil to the other pole, then doubles back and goes between the first coil, without overlapping, back to the first pole. So it starts and ends at the same place.

- I think that I can make a sparse array with polar positions at arbitrary indexes, and the other positions interpolated.

- Does the LED indexing follow the actual labyrinth pattern logically?

- Yes. As the LED rope wraps around the ball, that path (index 0 to 249) is the path of the labyrinth in animations.

- Motion-based interaction

- How should the sphere respond when tilted in different directions?

- For the first step, color the bottom of the sphere blue, and the top green.

- This should work at any orientation.

- What data from the accelerometer/gyroscope is most relevant (orientation vs. movement)?

- At first, orientation.

- How quickly should the LEDs respond to movement?

- Should feel very responsive, like turning a ball filled halfway with water. But it's a little planet. :)

- Animation patterns

- What types of animations should be implemented?

- Simple to start. Maybe the animation code could feel like shader code. A function of the index, polar position, and time returns a color. The function called once per pixel.

- Should different areas of the labyrinth behave differently?

- How to implement the "character" moving along the labyrinth path?

- Later, our "pilgrim pixel" will walk along the rope, and the goal will be to keep him on top.

- We don't need to program an error or game over. Just keep the one pixel moving at a slow steady pace.

- Game mechanics – think about this section later

- How does the "character" (represented by one LED) navigate the labyrinth?

- What are the winning/losing conditions?

- Is there a time element or challenge?

- How does the "water" concept integrate (mentioned in the blue hemisphere idea)?
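The first motion step (bottom blue, top green at any orientation) and the shader-like animation idea above could be combined roughly as follows. This is a minimal sketch in plain C++ so the logic can be tried off-device. `Vec3`, `Rgb`, `dot`, and `shadeGravity` are hypothetical names, and the gravity vector is assumed to be normalized and already expressed in the same axes as the LED map.

```cpp
#include <cstdint>

// Hypothetical helper types; on the device FastLED's CRGB could replace Rgb.
struct Vec3 { float x, y, z; };
struct Rgb  { uint8_t r, g, b; };

// Dot product: positive when two unit vectors point roughly the same way.
inline float dot(const Vec3& a, const Vec3& b) {
  return a.x * b.x + a.y * b.y + a.z * b.z;
}

// "Shader" for one pixel: a function of the LED index, its position on the
// unit sphere, and time, called once per pixel. This one ignores index and
// time. `down` is the unit vector pointing toward the ground (assumed
// normalized and in LED-map axes).
Rgb shadeGravity(int index, const Vec3& pos, uint32_t timeMs, const Vec3& down) {
  (void)index;
  (void)timeMs;
  // LEDs facing the same way as gravity are on the bottom half: blue.
  // Everything else is on the top half: green.
  return (dot(pos, down) > 0.0f) ? Rgb{0, 0, 255} : Rgb{0, 255, 0};
}
```

At rest an accelerometer reports the reaction to gravity, which points up, so `down` would be the negated, normalized reading. Later animations could reuse the same signature and start using `index` and `timeMs`.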

Technical Questions to Resolve

- Power requirements and optimization

- How to manage battery life if portable?

- Integrate a battery bank that has its own charging. Make sure case has sufficient airflow.

- How to optimize brightness vs. power consumption?

- Single `BRIGHTNESS` in the code

- Memory considerations

- Will the RP2040 have enough memory for all animations and states?

- How to efficiently store and access the LED mapping?

- Performance concerns

- What's the optimal refresh rate for smooth animations?

- Try 100 Hz

- How to handle multiple simultaneous processes (reading sensors, updating LEDs)?

- By adding a `setup1()` and `loop1()` function to your sketch you can make use of the second core. Anything called from within the `setup1()` or `loop1()` routines will execute on the second core. `setup()` and `setup1()` will be called at the same time, and the `loop()` or `loop1()` will be started as soon as the core’s `setup()` completes (i.e. not necessarily simultaneously!).

- Calibration process

- How will the device be calibrated for orientation?

- Does it need a "home" position?

- Position at startup will be defined as up.

UX Considerations

- Feedback mechanisms

- How does the user know their actions are affecting the system?

- What visual cues indicate progress or state changes?

- Intuitiveness

- How can the interaction be made immediately understandable?

- Is there a learning curve, and if so, how to minimize it?

- Engagement

- What makes the experience engaging beyond the initial novelty?

- How to encourage continued interaction?

Physical Design Questions

- Structure

- What material for the final sphere structure?

- How to ensure the LEDs stay fixed in the labyrinth pattern?

- How to provide access to components for maintenance?

- Tactile elements

- Beyond the LED rope, what other tactile elements could enhance the experience?

- Should the surface have texture or relief?

- Size considerations

- What is the optimal diameter for handheld interaction?

- Weight considerations for comfortable handling?

Future Development Paths

- Connectivity

- Could it connect to other devices or installations?

- Potential for multiplayer or social elements?

- Customization

- User-defined patterns or games?

- Different difficulty levels or modes?

- Additional sensors

- Would other types of sensors add meaningful interaction?

- Proximity sensors, touch sensors, etc.?

Implementation Plan

- Prioritized feature list

- Core functionality vs. "nice to have" features

- Minimum viable product definition

- Testing methodology

- How to test different aspects (hardware, software, user experience)

- What metrics define success?

- Development phases

- What's the logical order of implementation?

- Key milestones and dependencies?

Planning prompt

> Here's my concept for a tangible labyrinth.

> ./tactile-labyrinth.md

> And here's the very early Arduino code that I have.

> ./tactile-labyrinth/TactileLabyrinthByForresto.ino

> Help me make a structure for a readme.md file to fill in all of the unknowns for the project to make a full spec. Add sections as needed, and bulletpoint questions to answer. Don't write code until the readme is ready.

> ./13-midterm-plan.md

Sent to Claude 3.7 "Thinking" via Zed.

Prototype integration progress

LED Mapping

Arduino test code: TactileLabyrinthMappingTest.ino

Desmos graph with approximate LED path.

```cpp
// Function to calculate position for LED along the double coil
void getPosition(float s, float& x, float& y, float& z) {
  const float a = 3.75; // Controls number of turns

  // Determine which half we're in
  float sign = (s < 0.5) ? 1.0 : -1.0;

  // Map s to t (0-1) for each half
  float t = (s < 0.5) ? 2.0*s : 2.0*(1.0-s);

  // Calculate position using the formula
  x = sign * sqrt(1.0-t*t) * cos(a*PI*t);
  y = sqrt(1.0-t*t) * sin(a*PI*t);
  z = sign * t;
}
```

Should I precalculate all of the indexes (bigger program memory, smaller startup cost, same running memory) or do this calculation on board on startup?

Analysis of Tradeoffs (Claude 3.7)

| Factor | Precalculated in Flash | Calculated at Startup |
|---|---|---|
| Program Size | +3,000 bytes in flash | +150-200 bytes in flash |
| RAM Usage | Same (3,000 bytes) | Same (3,000 bytes) |
| Startup Time | Immediate | ~5-10ms on RP2040 |
| Flexibility | Must recompile to change | Can modify parameters easily |

Why Runtime Calculation Is Better for This Project.

- Memory is not a constraint here

- The RP2040 has 264KB RAM and 2MB flash

- 3,000 bytes is only ~1% of available RAM

- Floating-point is fast enough on the RP2040

- The Cortex-M0+ cores have no hardware FPU, but the optimized software float routines in the boot ROM are relatively fast

- The startup delay will be imperceptible to users

- Development advantages

- Easier to experiment with different patterns

- Can modify parameters without recompiling

- Code is more self-documenting

- Future flexibility

- Potentially store parameters in flash or read from sensors

- Easier to implement different patterns or animations

- Could allow for dynamic reconfiguration
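The startup-calculation option could look roughly like this. It is a sketch in plain C++ that reuses the `getPosition()` formula from above. `LedPosition`, `ledPositions`, `buildLedPositions`, and `kPi` are hypothetical names, and it assumes LED `i` sits at fraction `i / (NUM_LEDS - 1)` along the rope.

```cpp
#include <cmath>
#include <cstddef>

constexpr int NUM_LEDS = 250;        // from the hardware list
constexpr float kPi = 3.14159265f;   // stand-in for Arduino's PI

struct LedPosition { float x, y, z; };

// Filled once at startup: 250 LEDs * 3 floats * 4 bytes = 3,000 bytes of RAM.
LedPosition ledPositions[NUM_LEDS];

// Same formula as getPosition() above, written with explicit float math.
void getPosition(float s, float& x, float& y, float& z) {
  const float a = 3.75f;                                  // number of turns
  float sign = (s < 0.5f) ? 1.0f : -1.0f;                 // which half
  float t = (s < 0.5f) ? 2.0f * s : 2.0f * (1.0f - s);    // 0..1 per half
  float r = std::sqrt(1.0f - t * t);                      // radius at height t
  x = sign * r * std::cos(a * kPi * t);
  y = r * std::sin(a * kPi * t);
  z = sign * t;
}

// Assumption: LEDs are evenly spaced along the rope parameter s in [0, 1].
void buildLedPositions() {
  for (int i = 0; i < NUM_LEDS; ++i) {
    float s = static_cast<float>(i) / (NUM_LEDS - 1);
    getPosition(s, ledPositions[i].x, ledPositions[i].y, ledPositions[i].z);
  }
}
```

On the device, `buildLedPositions()` would be called once from `setup()`. Every point the formula produces lies on the unit sphere, which gives a cheap sanity check after changing parameters.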

Rethinking mapping

Let's remake this into an interactive process. Using serial commands, we can skip to any LED by index. Then I can find LEDs at key points on the sphere, and create an array. These key points will use polar coordinates, because these are easier to estimate on the ball. Then positions will be calculated from those key points, and interpolated for the rest of the LED indices.
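One way the key-point interpolation could work is sketched below in plain C++. `KeyPoint`, `Vec3`, `toCartesian`, and `interpolatePositions` are hypothetical names, not taken from the actual sketch. It assumes the key points are sorted by LED index and that theta is stored "unwrapped", so it keeps growing past 2π as the rope coils around the ball.

```cpp
#include <cmath>
#include <cstddef>

struct KeyPoint { int index; float theta; float phi; };  // angles in radians
struct Vec3 { float x, y, z; };

// Polar to Cartesian on the unit sphere; phi = 0 is the north pole (+Z).
Vec3 toCartesian(float theta, float phi) {
  return { std::sin(phi) * std::cos(theta),
           std::sin(phi) * std::sin(theta),
           std::cos(phi) };
}

// Fill out[0..numLeds-1] by linear interpolation between key points.
// Assumptions: keys are sorted by index and theta is unwrapped.
// LEDs before the first or after the last key point copy the nearest one.
void interpolatePositions(const KeyPoint* keys, std::size_t numKeys,
                          Vec3* out, int numLeds) {
  if (numKeys == 0) return;
  std::size_t k = 0;
  for (int i = 0; i < numLeds; ++i) {
    // Advance to the last key point at or before LED i.
    while (k + 1 < numKeys && keys[k + 1].index <= i) ++k;
    const KeyPoint& a = keys[k];
    if (i <= a.index || k + 1 == numKeys) {
      out[i] = toCartesian(a.theta, a.phi);
      continue;
    }
    const KeyPoint& b = keys[k + 1];
    float f = static_cast<float>(i - a.index) / static_cast<float>(b.index - a.index);
    out[i] = toCartesian(a.theta + f * (b.theta - a.theta),
                         a.phi + f * (b.phi - a.phi));
  }
}
```

Interpolating the angles rather than the Cartesian points keeps every LED on the sphere surface, at the cost of needing extra key points wherever the rope's spacing is uneven.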

How to Use This Interactive Mapping System (Claude 3.7)

TactileLabyrinthManualMapping.ino

- Connect to Serial Monitor (115200 baud)

- Navigate to LEDs:

  - `led 123` - Jump to LED #123

  - `next` - Go to next LED

  - `prev` - Go to previous LED

- Store Key Points:

  - Find an LED at a known position on your sphere

  - Use `key index theta phi` to store it (example: `key 0 0 0` for North Pole)

  - Theta is the longitude (0 to 2π), phi is the latitude (0 to π from North to South)

- Other Commands:

  - `list` - Show all mapped key points

  - `reset` - Clear all key points

  - `interpolate` - Calculate all LED positions from your key points

  - `mode` - Toggle between mapping and display modes

  - `display` - Cycle display modes (all cardinal points, or individual points)

- Recommended Key Points to Map:

  - North pole: theta doesn't matter, phi=0

  - South pole: theta doesn't matter, phi=π

  - Points around the equator (phi=π/2) at various theta values

  - Some points between equator and poles

The button on the device toggles between mapping mode and display mode.

Abstracting mapping

Now I want to abstract the positioning logic so that it can be reused between arduino projects. What's a good strategy for that?

Simple Header File Approach (Claude)

Perfect, let's create a simple, ready-to-include header file with your key points built in. This is a faster approach and makes sense when you're working with the same physical object across different projects.

Here's a complete, standalone header file that you can include in any Arduino project:

How to use this in your projects:

- Save the above code as `SphericalLEDMap.h` in your Arduino project folder or libraries folder

- Include it in your sketch

Integration with motion detection

Arduino test code: [TactileLabyrinthGravityTest.ino](./tactile-labyrinth/TactileLabyrinthGravityTest/TactileLabyrinthGravityTest.ino)

Which sensors would be cooler, accel and mag, or accel and gyro? Eventually it would be cool to toss and spin the ball, and have the lights react fast enough to do a persistence of vision effect, keeping colors locked in space as the ball spins in the air. Will latency be low enough with these chips and sensors?

Latency Analysis for POV Effects (Claude 3.7)

For persistence of vision effects where colors need to stay locked in space as the ball spins (especially during tosses), an accelerometer + gyroscope combination is significantly better:

Why Gyroscope is Crucial:

- Rotation detection: Gyroscopes directly measure rotational velocity - exactly what you need

- Speed: Much faster response time (400Hz-1.6kHz) compared to magnetometers (10-100Hz)

- Precision: More accurate for fast motions and spins

- Less interference: Magnetometers are affected by nearby electronics and metals

For POV to work, your system needs to update faster than the human eye can perceive the change in position:

| Component | Time | Note |
|---|---|---|
| Sensor reading | ~2.4ms | At 416Hz data rate (LSM6DSOX) |
| Processing | ~2ms | Vector math for 250 LEDs |
| LED update | ~7.5ms | Time to push data to 250 WS2812B LEDs |
| Total latency | ~12ms | |

Is this fast enough? Yes, with some limitations:

- A ball spinning at 2 rotations/second (fairly fast) rotates 7.2° in 10ms, or about 8.6° over the full ~12ms latency

- Human visual persistence is roughly 1/30 second (33ms)

- Your system should be fast enough to maintain the illusion
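The LED update and rotation figures above can be reproduced with a little arithmetic. This sketch assumes standard WS2812B timing (24 bits per LED at 800 kHz, so 1.25 µs per bit) and ignores the short reset gap between frames. The function names are hypothetical.

```cpp
// WS2812B timing: 24 bits per LED, 1.25 microseconds per bit (800 kHz).
constexpr float kBitsPerLed = 24.0f;
constexpr float kMicrosPerBit = 1.25f;

// Time to clock out one full frame, ignoring the reset/latch gap.
constexpr float frameTimeMs(int numLeds) {
  return numLeds * kBitsPerLed * kMicrosPerBit / 1000.0f;
}

// Highest refresh rate the LED data line alone allows.
constexpr float maxRefreshHz(int numLeds) {
  return 1000.0f / frameTimeMs(numLeds);
}

// Degrees the ball turns during a given latency.
constexpr float degreesTurned(float rotationsPerSecond, float latencyMs) {
  return rotationsPerSecond * 360.0f * latencyMs / 1000.0f;
}
```

For 250 LEDs, `frameTimeMs(250)` is 7.5 ms, so the data line alone caps the refresh rate near 133 Hz. A 100 Hz target would leave roughly 2.5 ms per frame for sensor reads and vector math if the LED write blocks the same core.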

Matching accelerometer orientation to LED map

XYZ on the mapping don't match XYZ of the accelerometer data. Make a simple way to configure the orientation in software to match the physical layout.

Accelerometer Configuration Solution (Claude)

You need a way to match the physical orientation of your accelerometer with the XYZ coordinate system you're using for LED mapping. Let's implement a solution that allows you to configure the axis orientation in software.

```cpp
// Configure these to match your physical orientation
AxisMapping ACCEL_X_MAPS_TO = AXIS_POSITIVE_X; // Which sensor axis maps to your X
AxisMapping ACCEL_Y_MAPS_TO = AXIS_NEGATIVE_Z; // Which sensor axis maps to your Y
AxisMapping ACCEL_Z_MAPS_TO = AXIS_POSITIVE_Y; // Which sensor axis maps to your Z
```

(Took some trial and error, but they are matched now!)
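The excerpt does not show how those settings are applied, so the following is only a guess at the shape of it. The `AxisMapping` enum definition, `Vec3`, `selectAxis`, and `remapAccel` are assumptions for illustration. The sketch reads each setting as "which signed sensor axis feeds this axis of the LED map".

```cpp
// Hypothetical definition of the AxisMapping values used in the settings.
enum AxisMapping {
  AXIS_POSITIVE_X, AXIS_NEGATIVE_X,
  AXIS_POSITIVE_Y, AXIS_NEGATIVE_Y,
  AXIS_POSITIVE_Z, AXIS_NEGATIVE_Z
};

struct Vec3 { float x, y, z; };

// Pick one signed component of the raw sensor reading.
float selectAxis(const Vec3& raw, AxisMapping m) {
  switch (m) {
    case AXIS_POSITIVE_X: return  raw.x;
    case AXIS_NEGATIVE_X: return -raw.x;
    case AXIS_POSITIVE_Y: return  raw.y;
    case AXIS_NEGATIVE_Y: return -raw.y;
    case AXIS_POSITIVE_Z: return  raw.z;
    case AXIS_NEGATIVE_Z: return -raw.z;
  }
  return 0.0f;  // not reached for valid enum values
}

// Assumed meaning: each setting names the signed sensor axis that supplies
// the corresponding axis of the LED map.
Vec3 remapAccel(const Vec3& raw, AxisMapping xFrom, AxisMapping yFrom,
                AxisMapping zFrom) {
  return { selectAxis(raw, xFrom), selectAxis(raw, yFrom), selectAxis(raw, zFrom) };
}
```

With the settings shown above, a raw reading of (1, 2, 3) would become (1, -3, 2) under this interpretation.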

Add the walking pixel

Let's add the wandering monk. A white pixel that steps along the LED rope with a rhythm. Step, step, step step step. The person holding the object will be responsible for keeping the monk on the top of the ball. The monk will stop walking if it enters the bottom of the ball. Or maybe it turns into a black pixel and keeps going, since the bottom of the ball is always lit up. This will be added to the existing Gravity mode, which will be renamed Monk Pixel Mode. Keep the current gravity colors, just add the monk pixel.

Response

```cpp
// Monk pixel variables
int monkPosition = 120;
unsigned long lastMonkStep = 0;
#define MONK_STEP_PATTERN_LENGTH 5
int monkStepPattern[MONK_STEP_PATTERN_LENGTH] = {500, 500, 500, 250, 250}; // step, step, step step step timing in ms
int monkStepPatternIndex = 0;

// ... the rest of the file
```

The monk now walks along the LED strand with the specified rhythm pattern. When it's on the top half of the sphere (according to gravity), it appears as a white pixel. When it enters the bottom half, it turns into a black pixel, which creates a visual effect of disappearing (since the bottom is always lit).
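The rest of the file is not shown, so here is a guess at how the stepping could be driven from those variables. It is written as plain C++ with the time passed in, so it can be tried off-device. `updateMonk` and `monkIsWhite` are hypothetical names, and wrapping back to LED 0 at the end of the rope is an assumption.

```cpp
#include <cstdint>

constexpr int NUM_LEDS = 250;  // from the hardware list
constexpr int MONK_STEP_PATTERN_LENGTH = 5;

// Names follow the variables above; the update logic is an assumption.
int monkPosition = 120;
uint32_t lastMonkStep = 0;
int monkStepPattern[MONK_STEP_PATTERN_LENGTH] = {500, 500, 500, 250, 250};  // ms
int monkStepPatternIndex = 0;

// Call every frame with the current time (millis() on the device).
// Moves the monk one LED forward once the current step delay has elapsed.
// Returns true if the monk moved.
bool updateMonk(uint32_t nowMs) {
  uint32_t delayMs = static_cast<uint32_t>(monkStepPattern[monkStepPatternIndex]);
  if (nowMs - lastMonkStep < delayMs) return false;
  lastMonkStep = nowMs;
  monkPosition = (monkPosition + 1) % NUM_LEDS;  // assumed: wrap at rope end
  monkStepPatternIndex = (monkStepPatternIndex + 1) % MONK_STEP_PATTERN_LENGTH;
  return true;
}

// White on the top half, black on the bottom half.
// `heightAlongUp` is the dot product of the monk LED's position with "up".
bool monkIsWhite(float heightAlongUp) {
  return heightAlongUp > 0.0f;
}
```

Starting from time 0, this version steps at 500, 1000, 1500, 1750, and 2000 ms, then repeats the rhythm.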

Animation detail idea

Make the total time of the step pattern change over time. As fast as 20 pixels per second, and as slow as 5. Move smoothly to a new pace every 20 seconds. Explain this code heavily in the comments.

When black, make the neighboring 2 pixels black as well.

Dynamic Speed Control System (Response)

I've implemented a sophisticated speed control system for the monk pixel that gradually changes speeds over time. Here's a breakdown of how it works:

- Speed Range Definition:

  - The monk's speed is defined in terms of pixels per second.

  - `MIN_SPEED` = 5 pixels per second (slowest)

  - `MAX_SPEED` = 50 pixels per second (fastest)

- Step Pattern System:

  - The base step pattern (`monkStepPatternBase`) defines the relative timing of steps

  - The actual step pattern (`monkStepPattern`) scales these times based on the current speed

- Two-Tier Speed Control:

  - Long-term changes: Every 20 seconds, a new random target speed is selected

  - Smooth transitions: The speed gradually changes toward the target in small increments

- Updating Process:

  - `updateMonkSpeed()`: Called regularly to handle speed transitions and target selection

  - `updateMonkStepPattern()`: Recalculates the actual step timings based on the current speed
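The breakdown above names the functions but not their bodies, so this is one possible shape for them rather than the generated code. `SPEED_STEP`, `SPEED_CHANGE_INTERVAL_MS`, and the `random01` parameter are assumptions. `MAX_SPEED` follows the 50 quoted in the response, although the request asked for 20.

```cpp
#include <cstdint>

constexpr float MIN_SPEED = 5.0f;    // pixels per second, as quoted above
constexpr float MAX_SPEED = 50.0f;   // pixels per second, as quoted above
constexpr uint32_t SPEED_CHANGE_INTERVAL_MS = 20000;  // new target every 20 s
constexpr float SPEED_STEP = 0.05f;  // assumed easing increment per update

constexpr int MONK_STEP_PATTERN_LENGTH = 5;
const float monkStepPatternBase[MONK_STEP_PATTERN_LENGTH] = {500, 500, 500, 250, 250};
float monkStepPattern[MONK_STEP_PATTERN_LENGTH];  // scaled delays in ms

float currentSpeed = MIN_SPEED;
float targetSpeed = MIN_SPEED;
uint32_t lastTargetChange = 0;

// Scale the base rhythm so one full pattern (5 steps) takes 5 / speed
// seconds, which keeps the average pace at currentSpeed pixels per second.
void updateMonkStepPattern() {
  float baseTotal = 0.0f;
  for (float v : monkStepPatternBase) baseTotal += v;
  float targetTotalMs = 1000.0f * MONK_STEP_PATTERN_LENGTH / currentSpeed;
  for (int i = 0; i < MONK_STEP_PATTERN_LENGTH; ++i) {
    monkStepPattern[i] = monkStepPatternBase[i] * targetTotalMs / baseTotal;
  }
}

// random01 is a value in [0, 1], e.g. random(0, 1001) / 1000.0f on Arduino.
void updateMonkSpeed(uint32_t nowMs, float random01) {
  // Long-term change: pick a new target every 20 seconds.
  if (nowMs - lastTargetChange >= SPEED_CHANGE_INTERVAL_MS) {
    lastTargetChange = nowMs;
    targetSpeed = MIN_SPEED + random01 * (MAX_SPEED - MIN_SPEED);
  }
  // Smooth transition: move toward the target in small fixed increments.
  float diff = targetSpeed - currentSpeed;
  if (diff > SPEED_STEP) currentSpeed += SPEED_STEP;
  else if (diff < -SPEED_STEP) currentSpeed -= SPEED_STEP;
  else currentSpeed = targetSpeed;
  updateMonkStepPattern();
}
```

At 5 pixels per second the scaled pattern becomes 250, 250, 250, 125, 125 ms, which sums to one second for five steps. Passing the random value in keeps the function deterministic for testing.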

04-24 integration status

04-27 integration status

Wireless and handheld at last.

I decided to leave it on today as a battery test, and the 10,000 mAh battery is still going after 8+ hours. Now I'm thinking that halving the battery capacity could be worth the weight savings.
